Of the many frustrations of having a severe motor impairment, the difficulty of communicating must surely be among the worst. The tech world has not offered much succor to those affected by things like locked-in syndrome, ALS, and severe strokes, but startup Cognixion aims to with a novel form of brain monitoring that, combined with a modern interface, could make speaking and interaction far simpler and faster.

The company’s One headset tracks brain activity closely in such a way that the wearer can direct a cursor — reflected on a visor like a heads-up display — in multiple directions or select from various menus and options. No physical movement is needed, and with the help of modern voice interfaces like Alexa, the user can not only communicate efficiently but freely access all kinds of information and content most people take for granted.

But it’s not a miracle machine or a silver bullet. Here’s how it got started.

Overhauling decades-old brain tech

Everyone with a motor impairment has different needs and capabilities, and there are a variety of assistive technologies that cater to many of these needs. But many of these techs and interfaces are years or decades old — medical equipment that hasn’t been updated for an era of smartphones and high-speed mobile connections.

Some of the most dated interfaces, unfortunately, are those used by people with the most serious limitations: those whose movements are limited to their heads, faces, eyes — or even a single eyelid, like Jean-Dominique Bauby, the famous author of “The Diving Bell and the Butterfly.”

One of the tools in the toolbox is the electroencephalogram, or EEG, which involves detecting activity in the brain via patches on the scalp that record electrical signals. But while they’re useful in medicine and research in many ways, EEGs are noisy and imprecise — more for finding which areas of the brain are active than, say, which sub-region of the sensory cortex or the like. And of course you have to wear a shower cap wired with electrodes (often greasy with conductive gel) — it’s not the kind of thing anyone wants to do for more than an hour, let alone all day every day.

Yet even among those with the most profound physical disabilities, cognition is often unimpaired — as indeed EEG studies have helped demonstrate. It made Andreas Forsland, co-founder and CEO of Cognixion, curious about further possibilities for the venerable technology: “Could a brain-computer interface using EEG be a viable communication system?”

He first used EEG for assistive purposes in a research study some five years ago. They were looking into alternative methods of letting a person control an on-screen cursor, among them an accelerometer for detecting head movements, and tried integrating EEG readings as another signal. But it was far from a breakthrough.

A modern lab with an EEG cap wired to a receiver and laptop – this is an example of how EEG is commonly used.

He ran down the difficulties: “With a read-only system, the way EEG is used today is no good; other headsets have slow sample rates and they’re not accurate enough for a real-time interface. The best BCIs are in a lab, connected to wet electrodes — it’s messy, it’s really a non-starter. So how do we replicate that with dry, passive electrodes? We’re trying to solve some very hard engineering problems here.”

The limitations, Forsland and his colleagues found, were not so much with the EEG itself as with the way it was carried out. This type of brain monitoring is meant for diagnosis and study, not real-time feedback. It would be like taking a tractor to a drag race. Not only do EEGs often work with a slow, thorough check of multiple regions of the brain that may last several seconds, but the signal they produce is analyzed by dated statistical methods. So Cognixion started by questioning both practices.

Improving the speed of the scan is more complicated than overclocking the sensors or something. Activity in the brain must be inferred by collecting a certain amount of data. But that data is collected passively, so Forsland tried bringing an active element into it: a rhythmic electric stimulation that is in a way reflected by the brain region, but changed slightly depending on its state — almost like echolocation.

They detect these signals with a custom set of six EEG channels in the visual cortex area (up and around the back of your head), and use a machine learning model to interpret the incoming data. Running a convolutional neural network locally on an iPhone — something that wasn’t really possible a couple years ago — the system can not only tease out a signal in short order but make accurate predictions, making for faster and smoother interactions.

The result is sub-second latency with 95-100 percent accuracy in a wireless headset powered by a mobile phone. “The speed, accuracy and reliability are getting to commercial levels — we can match the best in class of the current paradigm of EEGs,” said Forsland.
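For readers curious what "reading out" a rhythmic response can look like in code, here is a minimal sketch under loud assumptions. It is not Cognixion's pipeline, which the company says relies on a convolutional neural network. It shows a much older and simpler idea: if a stimulus repeats at a known frequency, compare the signal power at each candidate frequency and pick the strongest. The function names, the 250 Hz sample rate, the one-second window, the candidate frequencies and the synthetic data are all illustrative choices, not figures from the company.

```python
import math
import random


def goertzel_power(samples, sample_rate, target_hz):
    """Power of `samples` at the DFT bin nearest `target_hz` (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)  # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def pick_target(channels, sample_rate, candidate_hz):
    """Return the candidate frequency with the most power summed over all channels."""
    scores = {
        f: sum(goertzel_power(ch, sample_rate, f) for ch in channels)
        for f in candidate_hz
    }
    return max(scores, key=scores.get)


def synthetic_channels(freq_hz, sample_rate=250, seconds=1.0, n_channels=6, seed=0):
    """Fake data: a sine at `freq_hz` buried in Gaussian noise on each channel."""
    rng = random.Random(seed)
    n = int(sample_rate * seconds)
    return [
        [math.sin(2.0 * math.pi * freq_hz * t / sample_rate) + rng.gauss(0.0, 1.0)
         for t in range(n)]
        for _ in range(n_channels)
    ]


if __name__ == "__main__":
    channels = synthetic_channels(12.0)
    print(pick_target(channels, 250, [8.0, 10.0, 12.0, 15.0]))
```

Summing power across channels is a crude way to pool six electrodes. A learned model, like the one the article describes, would presumably weigh channels and timing far more cleverly.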

Dr. William Goldie, a clinical neurologist who has used and studied EEGs and other brain monitoring techniques for decades (and who has been voluntarily helping Cognixion develop and test the headset), offered a positive evaluation of the technology.

“There’s absolutely evidence that brainwave activity responds to thinking patterns in predictable ways,” he noted. This type of stimulation and response was studied years ago. “It was fascinating, but back then it was sort of in the mystery magic world. Now it’s resurfacing with these special techniques and the computerization we have these days. To me it’s an area that’s opening up in a manner that I think clinically could be dramatically effective.”

BCI, meet UI

The first thing Forsland told me was “We’re a UI company.” And indeed even such a step forward in neural interfaces as he later described means little if it can’t be applied to the problem at hand: helping people with severe motor impairment to express themselves quickly and easily.

Sad to say, it’s not hard to imagine improving on the “competition,” things like puff-and-blow tubes and switches that let users laboriously move a cursor right, right a little more, up, up a little more, then click: a letter! Gaze detection is of course a big improvement over this, but it’s not always an option (eyes don’t always work as well as one would like) and the best eye-tracking solutions (like a Tobii Dynavox tablet) aren’t portable.

Why shouldn’t these interfaces be as modern and fluid as any other? The team set about making a UI with this and the capabilities of their next-generation EEG in mind.

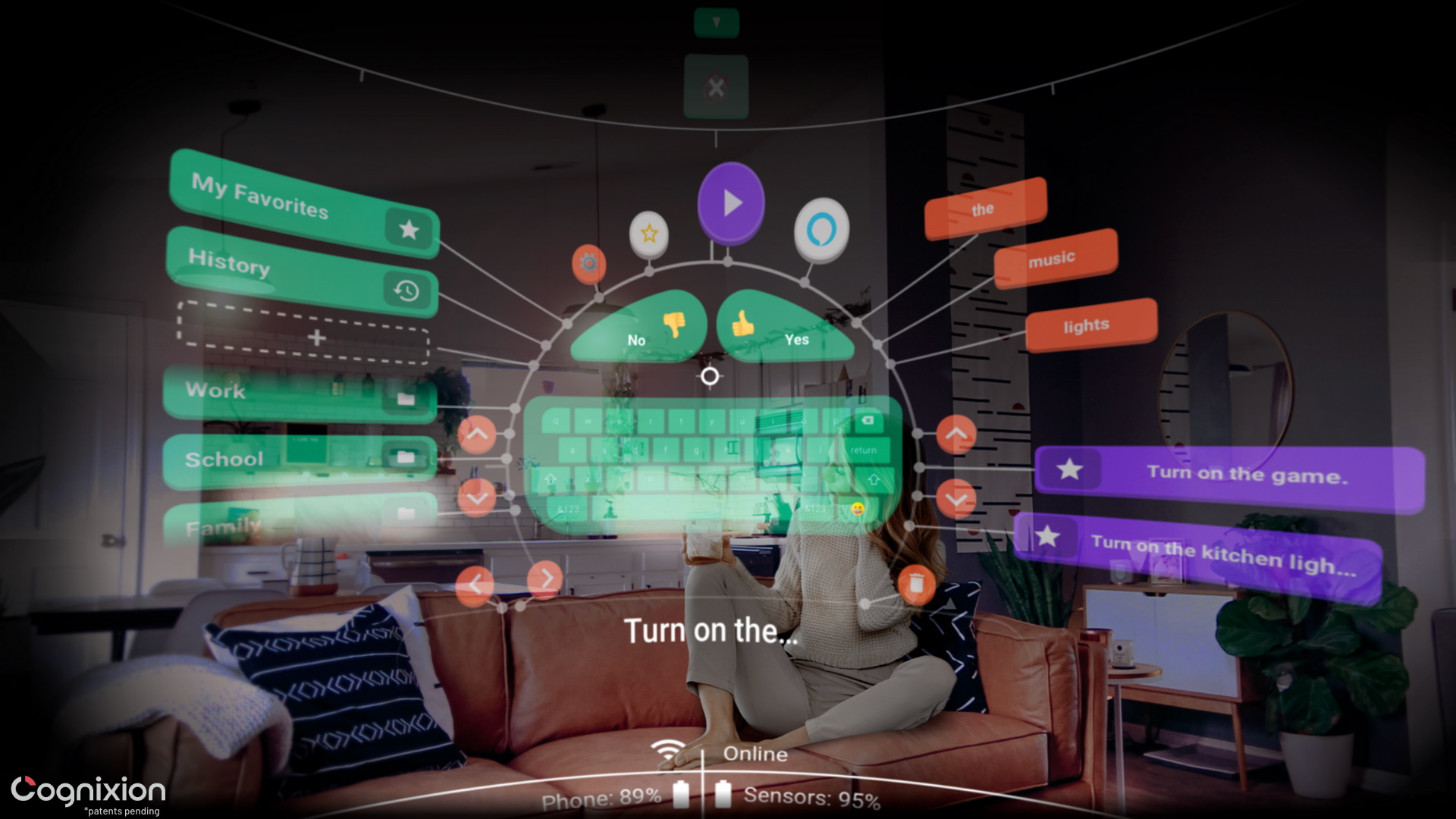

Their solution takes bits from the old paradigm and combines them with modern virtual assistants and a radial design that prioritizes quick responses and common needs. It all runs in an app on an iPhone, the display of which is reflected in a visor, acting as a HUD and outward-facing display.

Within easy reach, if not of a single thought then at least of a moment’s concentration or a tilt of the head, are everyday questions and responses: yes, no, thank you, etc. Then there are slots to put prepared speech into: names, menu orders, and so on. And then there’s a keyboard with word- and sentence-level prediction that allows common words to be popped in without spelling them out.
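Word-level prediction of the kind described here can be illustrated with a toy example. The sketch below is an assumption-laden stand-in, not the keyboard Cognixion ships: it simply counts how often each word has been used and offers the most frequent words that start with whatever has been typed so far. The names `build_model` and `suggest` and the sample phrase are hypothetical.

```python
from collections import Counter


def build_model(words):
    """Count how often each word appears (case-insensitive)."""
    return Counter(w.lower() for w in words)


def suggest(model, prefix, limit=3):
    """Return up to `limit` words starting with `prefix`, most frequent first.

    Ties are broken alphabetically so the ordering is predictable.
    """
    prefix = prefix.lower()
    matches = [(word, count) for word, count in model.items()
               if word.startswith(prefix)]
    matches.sort(key=lambda pair: (-pair[1], pair[0]))
    return [word for word, _ in matches[:limit]]


if __name__ == "__main__":
    history = "thank you thanks that is the thing thank you yes no the".split()
    model = build_model(history)
    print(suggest(model, "th"))
```

Each accepted suggestion replaces several individual letter selections, which matters a great deal when every selection costs the user real effort.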

“We’ve tested the system with people who rely on switches, who might take 30 minutes to make 2 selections. We put the headset on a person with cerebral palsy, and she typed out her name and hit play in 2 minutes,” Forsland said. “It was ridiculous, everyone was crying.”

Goldie noted that there’s something of a learning curve. “When I put it on, I found that it would recognize patterns and follow through on them, but it also sort of taught patterns to me. You’re training the system, and it’s training you — it’s a feedback loop.”

“I can be the loudest person in the room”
